Generative AI and parliamentary TA - How can responsible use be achieved?

Pauline Riousset, Steffen Albrecht, Christoph Kehl, Johanna Mehler, Christine Milchram, Bernd Stegmann | 11 December 2025

For many of us, the introduction of ChatGPT three years ago was a disruptive experience. First, there was a sense of astonishment, which was quickly followed by uncertainty. What can generative AI really do – and what consequences will this have? How is knowledge work changing? And what does this mean for the TAB as an advisory body to parliament? Or, to quote a previous TABlog post that took a "glimpse into the cooking pot of digital technology assessment methods": Are we facing a kind of “Thermomix” of TA methodology that not only requires new recipes, but also questions the role of the cook?

As one of the first parliamentary TA institutions, TAB was commissioned by the German Bundestag in early 2023 to conduct a study on the implications of large language models. As a result of major innovations in natural language processing, language models became broadly available at low cost, with potentially far-reaching consequences. The TAB study presented the fundamentals of the technological breakthrough in language processing and described the new possibilities and possible developments. In particular, the report shed light on a range of potential uses and applications, e.g., in the field of education and research. At the same time, the systemic limitations and possible negative effects of the new generative AI systems were discussed.

It quickly became clear that generative AI would not only continue to be a subject of investigation for TAB, but could also transform our own way of working. Generative AI has the potential to fundamentally change knowledge work and, in particular, policy advice in the field of technology assessment – in both a positive and a negative sense. The technology offers opportunities, for example in accelerating research processes. At the same time, there are risks, such as reduced diversity of perspectives and increased dependence on technology providers who pursue commercial interests and whose values do not necessarily coincide with those of independent research.

Our activities at TAB: from conceptual work to practice

In order to explore potential applications of generative AI for our work and with the aim of using AI applications responsibly, safely, and reflectively, the internal “TAKI” working group was formed at TAB in spring 2024. Since then, it has been systematically experimenting with different AI applications and discussing and evaluating the experiences gained in the process.

One of our recurring tasks is to assess the potential of generative AI for our work. To kick off our activities, we held internal workshops to design specific use cases and weigh up their potential and risks. We also surveyed other policy advisory TA institutions in Europe and the US about their ongoing projects and plans to use generative AI (see the poster paper from the DiTraRe symposium), authored publications on the role of digital methods in TA, and organized events with other TA researchers and policy advisors. In recent months, we have participated in the following events, among others:

- TA24 conference in Vienna | Article (June 2024)

- NTA11 conference in Berlin (November 2024)

- EPTA practitioners meeting in Bern (October 2025)

- GlobalTA webinar (October 2025)

- DiTraRe Symposium | presentation, poster (December 2025)

Informal meetings are also part of the programme. In September 2025, for example, we discussed our findings from the application of AI and the development of guidelines with members of the AI working group of the Bundestag administration.

Fields of application for AI in TAB work

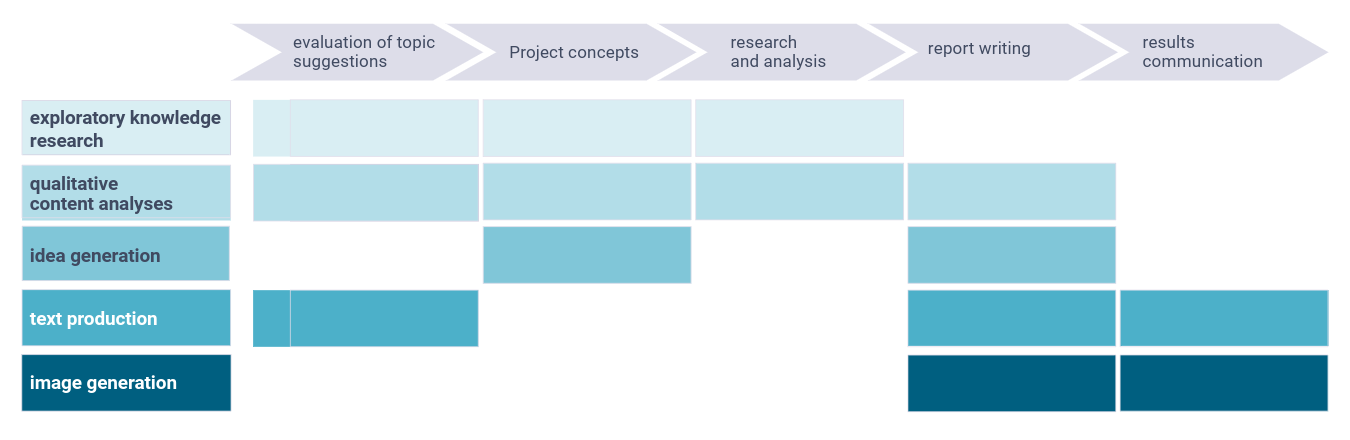

We quickly realized that large language models have the potential to support our work in almost all phases.

We tested and evaluated particularly promising use cases for generative AI in pilot projects. For example, topics for trend analysis in foresight studies were identified and extracted from large amounts of text (social media, magazines, newspapers) using a local language model. Further examples include the development of specialized chatbots that can provide targeted support when researching new topics for TAB studies, and the development of a chatbot for science communication. In February 2025, a data scientist joined our team to provide technical support to our activities.

Room for experimentation and reflection

As a TA institution, we put emphasis on identifying the requirements for the responsible use of technology, specifically in parliamentary TA. To this end, a group of colleagues at ITAS who specialize in the evaluation of research processes support us in assessing and reflecting on our experiences. On this basis, we examine: Where is the use of genAI sensible and justifiable, where is it merely “nice-to-have,” and where is it counterproductive? After all, the added value varies greatly depending on the tool, application context, and timing (e.g., due to model updates or new use cases). When deciding whether to use AI tools, the added value in terms of content, efficiency gains, legal risks, and social and environmental impacts plays a central role.

Guidelines for the use of generative AI

Our experiences to date have shown us that clear and specific guidelines are necessary, given the range of applications of generative AI in our work, the heterogeneous quality of its output, and its complex effects on, for example, data protection and energy consumption. We have therefore reviewed the legal framework and existing guidelines from related organizations and developed guidelines for TAB's work. They are tailored to the use cases we have identified and take into account the special criteria of non-partisan scientific policy advice. In the guidelines, we address the various possible uses of generative AI, emphasize the need for quality assurance, and highlight the responsibility of authors. In addition, we address issues of copyright and data protection, as well as the need for transparent labelling and consideration of social and environmental impacts. As the tools are constantly evolving, we will regularly review and update the guidelines to ensure they remain up to date and practical.

TABlog as a forum for reflection on responsible AI use

We will present our current guidelines for the responsible use of AI in parliamentary TA in more detail in one of the next TABlog posts. We will also discuss the use cases and other aspects of the use of generative AI in more detail here in the coming weeks. So stay tuned and feel free to share this post with anyone who might be interested! We also look forward to your feedback and the exchange of experiences!

Contact: info∂tab-beim-bundestag.de